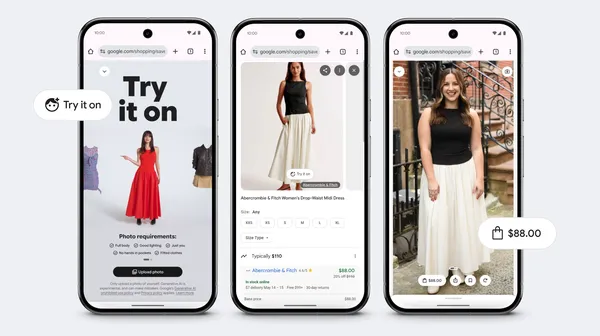

Google is pushing its AI shopping tools further with a new feature that can generate a full-body digital avatar from a single selfie. The system relies on Google's Nano Banana image model, which is designed to work quickly while still producing realistic results. Instead of asking users to upload full-length photos, the tool combines facial features from the selfie with the user's selected size information to estimate body proportions and build a complete avatar that can be reused across online clothing try-on experiences.

This update significantly lowers the barrier for virtual fitting tools, which have traditionally required carefully framed full-body images that many users either don’t have or don’t want to upload. With the new approach, users simply take a selfie, confirm their general body size, and choose from several AI-generated avatar variations. Once selected, that avatar becomes the reference model for previewing how clothing items might fit, drape, and scale on their body type.

The avatar system is integrated directly into Google’s shopping and search experiences, allowing users to preview clothing without installing separate apps or creating new accounts. When browsing supported apparel listings, users can apply their avatar to see how items look on a body shape that more closely resembles their own, rather than relying on generic models. Google positions this as a way to reduce uncertainty when shopping online, especially for fit-sensitive items like jackets, dresses, and layered clothing.

From a technical standpoint, the shift to selfie-based generation shows how far image models have progressed in inferring human proportions from limited input. Rather than producing a one-off image, the system creates a consistent body representation that can be reused across different outfits, keeping scale and alignment stable from one item to the next. This consistency is critical for making virtual try-ons feel believable rather than like a novelty.

The company also sees this as a step toward reducing product returns, a long-standing problem in online fashion retail. By giving shoppers a clearer expectation of fit before purchasing, the avatar system could help customers make more confident decisions while saving retailers the cost and waste associated with returns. While the feature is currently focused on clothing, the underlying technology could later extend to accessories, footwear, or even full outfit styling.

Privacy remains a key consideration, and Google states that the images used to generate avatars are processed within its secure systems and tied to user consent. The feature is rolling out gradually, starting with limited regions and supported retailers, with broader availability expected as the technology matures and partnerships expand.

Overall, the move signals a broader shift in how AI is being applied to everyday consumer tasks. Rather than showcasing flashy demos, Google is embedding generative models into practical workflows, using AI to quietly remove friction from routine activities like online shopping. If adoption grows, selfie-based avatars could become a standard layer in digital retail, changing how people interact with products long before those products arrive at the door.